Rethinking evaluation in education and social-sector organizations

Evaluations often feel sterile, bureaucratic and punitive, but we believe this need not be the case. We evaluate constantly, asking “how things are going” for ourselves and for those we care about. When it comes to formal evaluation, however, things seem to have gone astray. Evaluation has been questioned for several reasons, and understanding them helps us overcome the limitations of current models.

The methods mismatch

There has long been a mismatch between the nature of the work that social-sector professionals such as educators undertake and the way their efforts are evaluated. Typically, an “input-output” model of education is assumed, borrowing the logic of a simple computer program: if x, then y. There are many reasons why this model is ill-suited to evaluating professional learning or a school intervention, but the essence is that it ignores what lies between the x and the y: the person.

For evaluation to be both valid and helpful, its method must be suited to the nature of what is being evaluated.

People and the organizations they work in are relational, developmental, and dynamic. What happens in a classroom or a school or a professional learning session is contingent on the knowledge, abilities, feelings, beliefs and motivations of the individuals involved. People constantly evaluate what they are doing and their relationships with those they work with, and this influences what they do.

By its nature, the “input-output” model downplays the importance of relationships and people's feelings, and the purposes people bring to their work. It tends to treat people as passive, which is demeaning: this is one reason why systematic evaluations can feel foreign, or even threatening.

We need methods sensitive to the fact that people are evaluative beings, for whom the world and their relationship to it matter.

Additionally, it is not enough to know “if” something made a difference. To judge the results of our efforts, we need to understand how and why they came to be. Only then can we replicate positive outcomes, and build on them. Knowing “how” and “why” we “got what we got” means taking seriously the role of people in generating these outcomes.

Finally, the “input-output” model downplays the significance of context. Context is not only the specific demographic makeup of an organization, but also the unique history and culture of the organization, and the community and region served. Just as master teachers “get to know” students, effective evaluators “get to know” what and who is being evaluated. For these reasons, we advocate for a context-sensitive, person-centered approach to evaluation.

What does it mean to be “person centered”?

Evaluators and policy makers tend to focus on average differences, while practitioners like educators look for growth in individuals. There is a reason why individuals, and not groups, receive grades. Our approach acknowledges this person-centered reality, and as a result, we produce more valid and reliable evaluations than those that only compare group means.

Averages or persons?

There is growing concern among researchers about the overreliance on the mean, or average. (In addition to the article cited below, see this article on "singular science".) We believe this overreliance on means distorts our evaluations of programs, organizations, schools and more.

Let us take a simple example. A school district has implemented a new restorative practices initiative, piloting it at one of its middle schools. Everyone wants to know whether the program is reducing student misconduct. Typically, the average incidence of student misconduct at the pilot school is compared with the average incidence at a middle school that did not pilot the initiative, and a statistical test determines whether the difference between the two averages is “statistically significant.”

But isn’t the point to examine the effect of restorative practices on individual students? The more individuals the program helps, the better the program. Persons, not averages, are where “program effect” will be found.
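To make the contrast concrete, here is a minimal Python sketch with invented referral counts for ten students at the pilot school (the numbers are hypothetical, chosen only for illustration). The group average falls noticeably, yet that drop comes almost entirely from two students; most students did not change at all.

```python
# Hypothetical discipline-referral counts for ten students at the pilot school,
# before and after the initiative (illustrative numbers, not real data).
before = [2, 3, 1, 4, 2, 3, 12, 10, 2, 1]
after = [2, 3, 2, 4, 2, 3, 2, 1, 2, 1]

# Per-student change (negative = fewer referrals).
changes = [a - b for b, a in zip(before, after)]

# Group-level view: the average change per student.
mean_change = sum(changes) / len(changes)

# Person-level view: how many individual students actually declined?
n_declined = sum(1 for c in changes if c < 0)

print(f"Mean change in referrals: {mean_change:+.1f}")
print(f"Students whose referrals declined: {n_declined} of {len(changes)}")
```

Here the mean change is -1.8 referrals per student, while only 2 of the 10 students actually declined and one student's referrals rose. A comparison of means would suggest a sizeable effect; counting persons shows the program helped a small minority.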

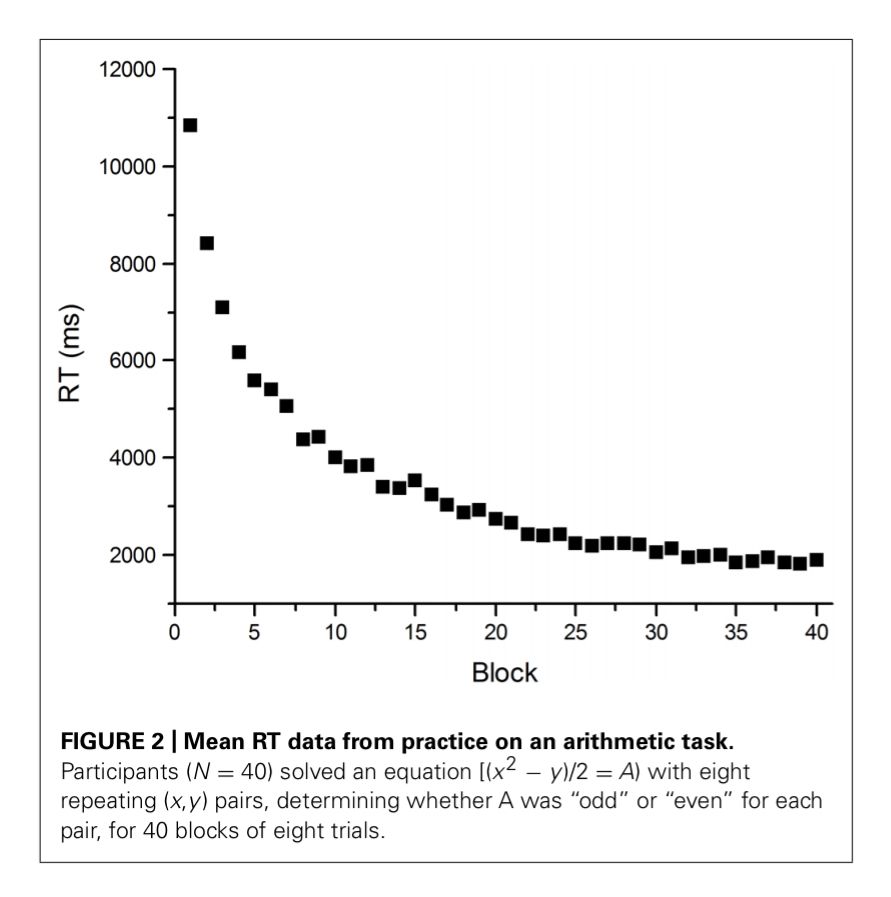

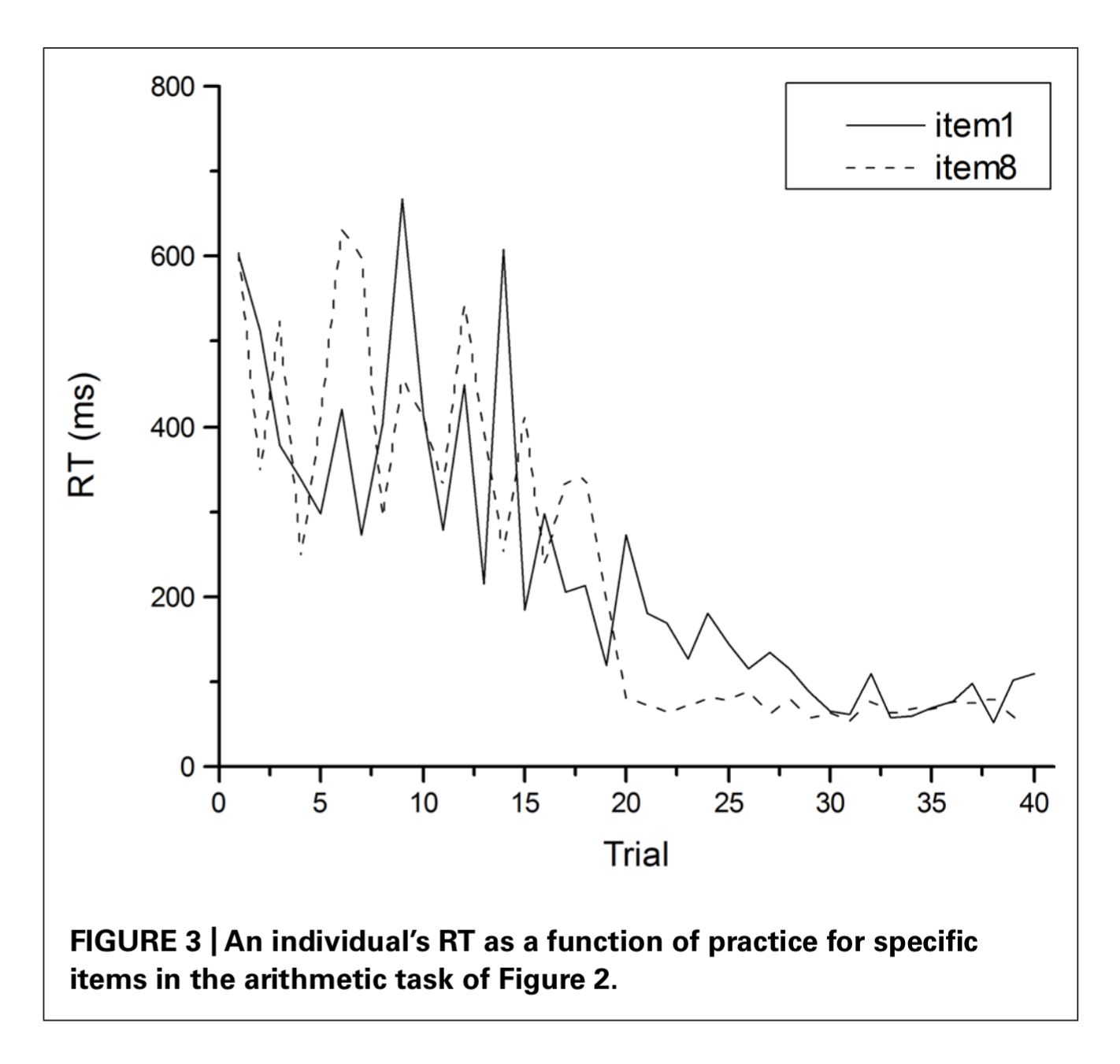

In fact, we now know that patterns observed in averaged data do not necessarily predict or explain what happens at the individual level. A now classic example is the “power law of learning.” This “law” states that the time it takes to perform a task decreases with the number of times the task is repeated, and that this decrease follows the shape of a “power law.”
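In symbols, the power law of learning is usually written as below, where T is the time taken on the N-th repetition and a and b are positive constants fitted to the data (the symbol names are our choice). Taking logarithms shows why such curves appear as straight lines when plotted on log-log axes:

```latex
\begin{aligned}
T(N) &= a\,N^{-b}, \qquad a > 0,\ b > 0,\\
\log T(N) &= \log a - b\,\log N.
\end{aligned}
```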

Yet when individual data are inspected, these “learning curves” are rarely observed. As the chart below shows, while people do tend to get faster with practice, their performance from one attempt to the next does not follow a power law.
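A commonly cited explanation for this discrepancy is that averaging can manufacture a smooth curve that no individual follows. The toy Python simulation below assumes, hypothetically, that each learner takes 10 seconds per attempt until a personal breakthrough trial and 4 seconds per attempt afterward. Each individual curve is a single abrupt step, yet the average across learners declines gradually. The numbers are invented and do not reproduce the chart's data.

```python
# Hypothetical learners: each takes 10 s per attempt until a personal
# "breakthrough" trial, then 4 s per attempt afterward (a step, not a power law).
N_TRIALS = 10
switch_trials = [1, 2, 2, 3, 4, 5, 7, 9]  # trial at which each learner speeds up


def individual_curve(switch: int) -> list[float]:
    """Time per attempt for one learner who speeds up abruptly at `switch`."""
    return [10.0 if trial < switch else 4.0 for trial in range(1, N_TRIALS + 1)]


curves = [individual_curve(s) for s in switch_trials]

# Average time on each trial across all learners.
mean_curve = [sum(times) / len(times) for times in zip(*curves)]

for trial, t in enumerate(mean_curve, start=1):
    print(f"trial {trial:2d}: mean {t:5.2f} s")
```

With these switch trials, the mean falls from 9.25 s on trial 1 to 4.0 s by trial 9, dropping fastest over the first few trials, even though no individual learner improves gradually.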

An Observation Oriented Modeling approach

Using an advanced technique known as Observation Oriented Modeling, we are able to examine the effect of a professional learning initiative, or a behavioral or instructional intervention, without using averages. We can ask how many individuals are positively affected, and how likely it is that the observed pattern arose by chance.

Continuing with our restorative practices example, we would report findings like this:

- 140 out of 200 middle school students (7 out of 10) taught restorative practices showed a decline in discipline referrals, while only 80 out of 200 middle school students (4 out of 10) not taught restorative practices showed a decline.

- Only 1 in 1000 randomized versions of the data reproduced this pattern. This means the pattern is very unlikely to be a chance event.
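The idea behind the second finding can be sketched as a generic randomization (permutation) test: shuffle which students count as “taught” and “not taught,” and see how often the shuffled data show a gap at least as large as the real one. This is a minimal Python sketch under our own assumptions, using the counts from the example above; Observation Oriented Modeling's own procedure and software are not reproduced here, and the function name `randomization_test` is hypothetical.

```python
import random


def randomization_test(treated: list[bool], control: list[bool],
                       n_trials: int = 1000, seed: int = 0) -> tuple[float, float]:
    """Compare the share of students who declined in two groups.

    Returns the observed difference in proportions (treated minus control) and
    the share of label-shuffled datasets whose difference is at least as large.
    """
    def diff(group_a: list[bool], group_b: list[bool]) -> float:
        return sum(group_a) / len(group_a) - sum(group_b) / len(group_b)

    observed = diff(treated, control)
    pooled = treated + control      # new list; the inputs are not modified
    n_treated = len(treated)
    rng = random.Random(seed)       # fixed seed so the result is reproducible
    hits = 0
    for _ in range(n_trials):
        rng.shuffle(pooled)         # randomly reassign students to the two groups
        if diff(pooled[:n_treated], pooled[n_treated:]) >= observed:
            hits += 1
    return observed, hits / n_trials


# One entry per student: True means their discipline referrals declined.
treated = [True] * 140 + [False] * 60   # taught restorative practices
control = [True] * 80 + [False] * 120   # not taught restorative practices

observed, share = randomization_test(treated, control)
print(f"Observed difference: {observed:.0%} of students")
print(f"Shuffled datasets at least as extreme: {share:.3f}")
```

Proportions rather than raw counts are compared so the sketch also works when the groups differ in size. A share of zero would mean none of the 1,000 shuffles matched the observed pattern (roughly, fewer than 1 in 1000), not that chance is impossible; the “1 in 1000” figure above comes from the original analysis, not from this sketch.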

By staying at the level of the individual student, the practical effect of the initiative is easy to see. Of course, we would explore differences in response to the initiative by various student characteristics, and we'd also study select individual students over time (similar to the "singular science" article above). We would also explore what other differences might exist between the two schools that might explain this pattern.

What "works"? Or what works for us?

While traditional data analysis techniques look to generalize findings from, say, one school (a sample) to all schools (a population), the aim of our approach is to help educators determine what works for them, in their context. In practice, we are less concerned with “what works” in some abstract way, and more concerned with what works for us: with our students and our community.

Finally, Observation Oriented Modeling emphasizes the use of “integrated models” to develop our thinking about how things work, and what is needed to improve outcomes. Integrated models help practitioners develop person-centered depictions of how context and relationships, and individual abilities, characteristics, and intentions, all contribute to outcomes.

Keeping a focus on context

The results of decades of school reform efforts and other popular policy initiatives are not terribly impressive. What appeared to work in one place doesn’t seem to work in another. This is why our approach emphasizes the importance of context for understanding and explaining outcomes. Most organizations that serve the public are "open systems," meaning they do not, and cannot, organize themselves as an experimental scientist would, with everything controlled except the factor being manipulated (that would be a "closed system"). Practically, our context-focused evaluation helps us discover how:

- different causes can have the same effect (e.g., similar test score declines can be caused by a hurricane or by demographic shifts);

- different effects can have the same cause (e.g., an influx of new teachers can improve morale in one district, while making it worse in another).

To evaluate a program, initiative or professional learning effort, we need to know not only whether something made a difference, but how and why. This often requires the understanding of those involved. To unearth these dynamics, we conduct interviews, focus groups, and document reviews. This makes our evaluations comprehensive, and as a result, we are able to offer more definitive recommendations.

Reports that you can actually use

We pride ourselves on tailoring our evaluation services to client needs, aims and resources. Our reports focus on what matters most. They are targeted and brief so that organizations can put their precious time into taking action. Give us a call to see how our approach can help your organization!